Exploring Google's GameNGen: A Revolutionary AI Game Engine

Understanding the GameNGen Revolution

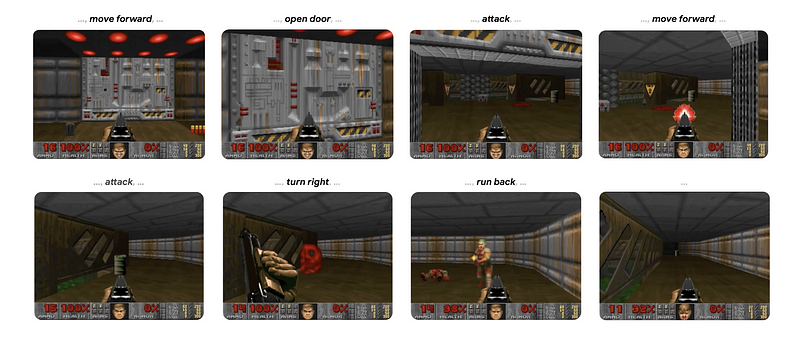

Imagine a digital universe orchestrated by an advanced AI, crafting fresh scenes and adventures for its users in real time. Recent research from Google, released on arXiv, introduces GameNGen, a game engine powered entirely by a neural network. The system can simulate the classic video game Doom at high visual quality while a player interacts with it in real time.

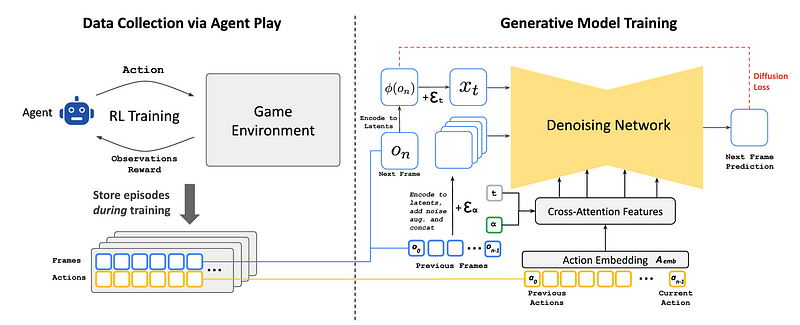

GameNGen first uses Reinforcement Learning to train an AI agent to play the original game. The agent's gameplay is recorded and used to train a Diffusion model that generates game frames for a human player in real time.

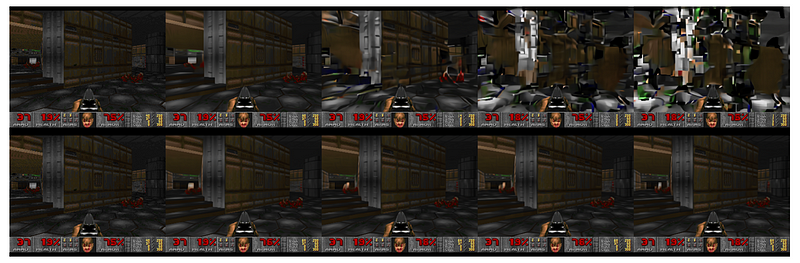

The result is striking: a player can play Doom rendered entirely by GameNGen in real time at 20 frames per second. This article walks through how the model works, how it is trained, and what it could mean for the future of AI applications.

How Do Computer Games Function?

At the core of computer games lies a software structure known as the Game loop, which encompasses three fundamental tasks:

- Collect user input.

- Update the game state based on that input.

- Render the updated state on the user's display.

The Game loop repeats many times per second, which is what makes the experience feel immersive and interactive. It is driven by a Game engine, a set of programmed rules that uses the computer's hardware to simulate the game. Notably, this hardware need not be a traditional computer; many kinds of devices can run a game.
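
The three steps of the loop can be sketched in a few lines of Python. This is only a toy illustration: the `GameState` class, the one-dimensional "world", and the text renderer are all made up for this example and have nothing to do with how Doom is implemented.

```python
from dataclasses import dataclass


@dataclass
class GameState:
    x: int = 0       # player position on a 1-D line (toy world)
    frame: int = 0   # number of updates applied so far


def update(state: GameState, action: str) -> GameState:
    """Apply the game rules: move the player according to the input."""
    dx = {"left": -1, "right": 1}.get(action, 0)
    return GameState(x=state.x + dx, frame=state.frame + 1)


def render(state: GameState, width: int = 7) -> str:
    """Draw the state as a text 'frame' with the player marked as @."""
    col = max(0, min(width - 1, state.x + width // 2))
    return "." * col + "@" + "." * (width - 1 - col)


def game_loop(inputs):
    """Run one loop iteration per queued input and return the rendered frames."""
    state = GameState()
    frames = []
    for action in inputs:              # 1. collect user input
        state = update(state, action)  # 2. update the game state
        frames.append(render(state))   # 3. render the updated state
    return state, frames


if __name__ == "__main__":
    _, frames = game_loop(["right", "right", "left", "noop"])
    print("\n".join(frames))
```

In a conventional engine, `update` and `render` are hand-written rules. The idea explored in the rest of this article is to let a neural network take over both.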

This prompts an intriguing question: If AI can substitute for a set of rules in numerous non-graphical tasks, why can’t it replace a Game engine?

Challenges in Replacing a Game Engine with AI

Numerous generative AI models are readily available today, particularly those based on the Diffusion model, such as:

- DALL-E and Stable Diffusion for Text-to-Image generation.

- Sora for Text-to-Video generation.

While simulating a video game might seem akin to rapid video generation, this is misleading. The process entails managing a player's dynamic interactions and adapting the game state accordingly in real-time. This adjustment requires intricate logic and physics computations that must occur on the fly.

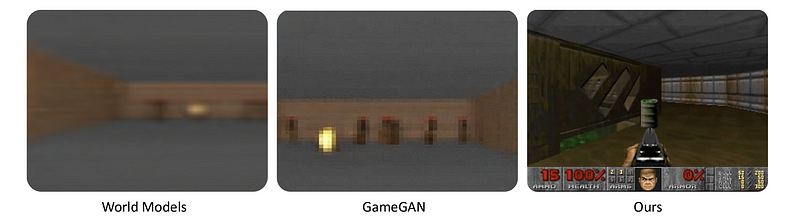

Moreover, each frame in a video game depends on the frames and actions that came before it, which makes it difficult to keep the game state consistent over long stretches of play. Earlier research in this domain has struggled with exactly this problem.

GameNGen makes real progress on these challenges, bringing game simulation closer to the way images and videos are generated today.

Unpacking the GameNGen Model

Now, let’s look at how GameNGen works. The model borrows its formal setup from Reinforcement Learning. Picture an interactive environment (E) for a game like Doom, which comprises:

- Latent states (S): Representing the program's dynamic memory.

- Partial projections of these states (O): The rendered screen pixels.

- A Projection Function (V: S -> O): Mapping states to observations.

- A set of actions (A): Including keyboard presses and mouse movements.

- A Transition Probability Function (p): Governing how the game states evolve based on player actions.

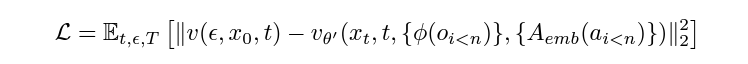

A simulation of this interactive world can be described by a Simulation Distribution Function (q) that produces the next observation given past observations and actions. The aim is to minimize the gap between simulated and actual observations, measured with a chosen distance metric (D). An RL agent interacts with this environment, generating a trajectory of states, actions, and observations.
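
One way to write the setup above as formulas, using the article's own symbols, is sketched below. The index conventions, the trajectory symbol \tau, and the superscripts on the observations are notational assumptions made for this sketch rather than something spelled out in the text above.

```latex
% Simulation: the next observation is sampled given past observations and actions
o_n \sim q\left(o_n \mid o_{<n},\, a_{\le n}\right), \qquad o_i \in O,\ a_i \in A

% Objective: keep simulated observations close to the real ones under the metric D,
% where o_q^{i} comes from the simulation q and o_p^{i} = V(s_i) comes from the
% real environment, whose states s_i evolve according to p
\min_{q} \ \mathbb{E}_{(o_i,\, a_i) \sim \tau} \left[ D\left(o_q^{i},\, o_p^{i}\right) \right]
```

Here \tau stands for the trajectories the agent collects, so the expectation is taken over the situations the agent actually visits while playing.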

This information is harnessed to train a generative model utilizing the Teacher-forcing method, where the model learns to predict future observations based on actual sequences from the environment.

Exploring GameNGen's Training Process

An RL agent is needed because collecting human gameplay data at scale is difficult. So, as a first step, an RL agent is trained to play the game in a manner akin to human players. This step uses the Proximal Policy Optimization (PPO) algorithm with a simple Convolutional Neural Network (CNN) that extracts features from the game's frame images.

The agent is trained on a CPU using the Stable Baselines 3 framework. Once trained, it plays the game according to its RL policy while its actions and observations are recorded. This recorded data is then used to train a generative diffusion model.

The generative model is built on a pre-trained text-to-image diffusion model, Stable Diffusion v1.4, which is re-purposed to generate images conditioned on the agent's past actions and observations rather than on text.
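
The data-collection step can be illustrated with a toy environment. In the sketch below, the environment, the action names, and the uniformly random `policy` are all placeholders invented for this example. In GameNGen the policy would be the trained PPO agent and the observations would be rendered Doom frames.

```python
import random

ACTIONS = ["left", "right", "fire"]   # A: a toy action set


def transition(state, action, rng):
    """p: sample the next latent state from the current state and action."""
    pos, ammo = state
    if action == "left":
        pos -= 1
    elif action == "right":
        pos += 1
    elif action == "fire" and ammo > 0:
        ammo -= 1
    # A little randomness stands in for enemies, physics and so on.
    pos += rng.choice([0, 0, 1, -1])
    return (pos, ammo)


def project(state):
    """V: S -> O. The observation exposes only part of the latent state."""
    pos, ammo = state
    return {"pos": pos, "has_ammo": ammo > 0}


def policy(observation, rng):
    """Placeholder for the trained agent's policy (here: uniform random)."""
    return rng.choice(ACTIONS)


def collect_trajectory(num_steps, seed=0):
    """Roll the policy out and record (observation, action) pairs."""
    rng = random.Random(seed)
    state = (0, 3)                    # initial latent state: position 0, 3 shots
    trajectory = []
    for _ in range(num_steps):
        obs = project(state)
        action = policy(obs, rng)
        trajectory.append((obs, action))
        state = transition(state, action, rng)
    return trajectory
```

Note that the recorded data contains only observations and actions. The latent state, such as the exact ammo count here, never reaches the generative model directly.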

Addressing Auto-Regressive Drift

During the Teacher-forcing training phase, the model learns from accurate environmental observations. However, during inference, it adopts an autoregressive approach, generating future observations based on its prior outputs. This can result in small errors compounding over time, a phenomenon known as Auto-regressive drift.
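
The difference between the two regimes shows up even in a toy setting. In the sketch below, the "environment" simply adds 1 at every step and the "model" is deliberately off by 0.05 per step. Both functions are invented for illustration and are not part of GameNGen.

```python
def true_step(x):
    """Ground-truth dynamics of the toy environment."""
    return x + 1.0


def model_step(x):
    """An imperfect learned model: slightly wrong on every step."""
    return x + 1.05


def rollout_errors(num_steps, x0=0.0):
    """Return per-step errors under teacher forcing and under autoregression."""
    truth = [x0]
    for _ in range(num_steps):
        truth.append(true_step(truth[-1]))

    # Teacher forcing: each prediction is conditioned on the TRUE previous value.
    teacher_forced = [
        abs(model_step(truth[t]) - truth[t + 1]) for t in range(num_steps)
    ]

    # Autoregressive: each prediction is conditioned on the model's OWN output.
    autoregressive = []
    x = x0
    for t in range(num_steps):
        x = model_step(x)
        autoregressive.append(abs(x - truth[t + 1]))
    return teacher_forced, autoregressive


if __name__ == "__main__":
    tf_errors, ar_errors = rollout_errors(20)
    print("teacher-forced error at last step:", round(tf_errors[-1], 3))
    print("autoregressive error at last step:", round(ar_errors[-1], 3))
```

Under teacher forcing the error stays at the same small value on every step, while in the autoregressive rollout it grows with every frame. That growing gap is the drift described above.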

To mitigate this, a noise augmentation technique is used: varying levels of Gaussian noise are added to the input frames during training, so the model learns to recover from imperfect inputs and can correct for Auto-regressive drift at inference time.
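
A minimal sketch of this kind of augmentation is shown below. Frames are represented as flat lists of floats, and the function name, the `max_noise_std` value, and the choice to return the sampled noise level (so that a model could take it as an extra input) are assumptions made for this example.

```python
import random


def augment_context(frames, max_noise_std=0.7, rng=None):
    """Corrupt context frames with Gaussian noise of a randomly sampled level.

    frames: list of frames, each a flat list of floats (a toy stand-in for
    pixels or latents). Returns the noisy copies and the sampled noise level.
    """
    if rng is None:
        rng = random.Random()
    # Sample a different noise level for every training example.
    noise_std = rng.uniform(0.0, max_noise_std)
    noisy = [
        [value + rng.gauss(0.0, noise_std) for value in frame]
        for frame in frames
    ]
    return noisy, noise_std
```

Because the noise level varies from one example to the next, the model sees clean and heavily corrupted context frames alike, which resembles the imperfect frames it has to condition on when it feeds on its own outputs.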

Fine-Tuning the Stable Diffusion Latent Decoder

Another source of error is the pre-trained auto-encoder of Stable Diffusion v1.4, which compresses pixel patches into latent channels. This compression can introduce visible artifacts, particularly in the game's Heads-Up Display (HUD), which contains critical gameplay information. The issue is addressed by fine-tuning only the decoder of the auto-encoder with a Mean Squared Error (MSE) loss computed between the decoded output and the original frames.
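
For reference, the MSE loss itself is simple to compute. The sketch below treats a frame as a flat list of pixel values, which is a simplification made for this example, since real frames are multi-dimensional arrays.

```python
def mse_loss(decoded, target):
    """Mean squared error between two frames given as flat lists of pixel values."""
    if len(decoded) != len(target):
        raise ValueError("frames must have the same number of pixels")
    return sum((d - t) ** 2 for d, t in zip(decoded, target)) / len(target)


if __name__ == "__main__":
    original = [0.0, 1.0, 1.0, 0.0]   # e.g. a few pixels of a HUD digit
    decoded = [0.1, 0.8, 1.0, 0.2]    # the decoder's slightly blurred output
    print(mse_loss(decoded, original))
```

The loss is zero only when the decoded frame matches the original pixel for pixel, so minimizing it pushes the decoder toward sharper reconstructions of small details.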

Evaluating GameNGen's Performance

GameNGen's simulation quality is high: human evaluators are only slightly better than random chance at telling clips of the simulation apart from clips of the actual game. Shown short random clips from the simulation and the real game side by side, they correctly identify the real game approximately 60% of the time, against a 50% chance baseline.

Currently, GameNGen can simulate high-quality real-time gameplay at 20 frames per second, but the model only has access to roughly 3 seconds of game history. This limitation makes it hard to keep the game logic consistent over extended periods. Nevertheless, this pioneering work paves the way for a future where games (and potentially entire virtual environments) are defined by neural model weights rather than conventional code.
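
The memory limit can be pictured as a fixed-size sliding window over recent frames and actions. The sketch below derives the window size from the two figures quoted above (20 frames per second and roughly 3 seconds). The `ContextWindow` class is a hypothetical illustration, not GameNGen's actual data structure.

```python
from collections import deque

FPS = 20                                  # frame rate quoted in the article
HISTORY_SECONDS = 3                       # approximate history length quoted above
CONTEXT_FRAMES = FPS * HISTORY_SECONDS    # about 60 frames of context


class ContextWindow:
    """Keep only the most recent (frame, action) pairs."""

    def __init__(self, max_frames=CONTEXT_FRAMES):
        self._buffer = deque(maxlen=max_frames)

    def push(self, frame, action):
        # When the buffer is full, the oldest entry is dropped automatically.
        self._buffer.append((frame, action))

    def contents(self):
        return list(self._buffer)


if __name__ == "__main__":
    window = ContextWindow()
    for i in range(100):
        window.push(f"frame-{i}", "noop")
    print(len(window.contents()), window.contents()[0][0])
```

Anything that happened before the oldest entry in the window is invisible to the model unless it is still reflected on screen, which is why long-term game logic is hard to maintain.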

What are your thoughts on the possibilities this research presents? Feel free to share your insights in the comments below.

Further Reading

- Research paper titled ‘Diffusion Models Are Real-Time Game Engines’, published on arXiv.

- Cameron R. Wolfe, Ph.D.’s article titled ‘Basics of Reinforcement Learning for LLMs’.

For updates on my work, consider subscribing:

- Subscribe to Dr. Ashish Bamania on Gumroad

- Byte Surgery | Ashish Bamania | Substack