The Future of AI: Navigating the Controversial SB-1047 Bill

Chapter 1: Understanding SB-1047

The SB-1047 bill has raised significant concerns within Silicon Valley, prompting a critical examination of its potential impact on the future of artificial intelligence. As it stands, this legislation, awaiting Governor Gavin Newsom's signature, could fundamentally alter the landscape of AI in California. Some view it as a necessary step forward, while others deem it the most severe misstep since the advent of ChatGPT two years ago.

Introduced by Senator Scott Wiener in February 2024, the bill aims to impose regulations on advanced AI technologies, inspired by President Biden's Executive Order on Artificial Intelligence. Wiener characterizes the legislation as a means to ensure the responsible development of large-scale AI systems by establishing straightforward and sensible safety standards for developers.

Although I am not well-versed in legal matters, I found the bill accessible enough to understand. I encourage everyone, regardless of expertise, to review its contents. Should it pass, it could serve as a model for future regulations both domestically and internationally. For those of you in California (about 25% of the audience), this is particularly relevant, but others can also gain insights from its provisions.

Here’s a concise overview of the key points that stand out:

Developers who train or fine-tune AI models covered by this bill must perform thorough internal safety evaluations, including assessing the risk of "unsafe post-training modifications." Before 2027, a model is covered if it meets certain criteria: it is trained with more than 10^26 FLOP of computing power at a cost of over $100 million, or it is fine-tuned from a covered model with more than 3 × 10^25 FLOP at a cost of over $10 million. Derivatives of these models are also included.
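To make the thresholds concrete, here is a minimal Python sketch of the coverage test. It is a simplified reading, not legal logic: it assumes the compute and cost conditions must both hold, uses 10^26 and 3 × 10^25 FLOP as the compute thresholds, and ignores derivatives and the post-2027 rules. The `ModelRun` record and `is_covered` function are hypothetical names invented for this illustration; the bill text itself is the authority.

```python
from dataclasses import dataclass

# Thresholds for models trained before 2027 (simplified reading of the bill).
TRAIN_FLOP = 1e26                # training compute threshold
TRAIN_COST_USD = 100_000_000     # training cost threshold
FINETUNE_FLOP = 3e25             # fine-tuning compute threshold
FINETUNE_COST_USD = 10_000_000   # fine-tuning cost threshold


@dataclass
class ModelRun:
    """Hypothetical record describing one training or fine-tuning run."""
    flop: float
    cost_usd: float
    is_fine_tune: bool = False


def is_covered(run: ModelRun) -> bool:
    """Return True if the run exceeds both the compute and cost thresholds.

    Assumption: both conditions must hold together. Derivatives of covered
    models are not handled in this sketch.
    """
    if run.is_fine_tune:
        return run.flop > FINETUNE_FLOP and run.cost_usd > FINETUNE_COST_USD
    return run.flop > TRAIN_FLOP and run.cost_usd > TRAIN_COST_USD


if __name__ == "__main__":
    frontier = ModelRun(flop=2e26, cost_usd=150_000_000)
    startup = ModelRun(flop=5e24, cost_usd=2_000_000)
    print(is_covered(frontier), is_covered(startup))
```

Under these assumptions, a run far below either threshold, such as the `startup` example, falls outside the bill's scope, which is why the thresholds matter so much to the debate that follows.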

Developers are required to establish comprehensive safety and security protocols, essentially crafting operation manuals for other developers and users. They must conduct annual assessments and, starting in 2026, engage third-party auditors while submitting yearly compliance statements.

A critical requirement is the implementation of a kill switch: developers must be able to shut down a covered model completely, after weighing the implications of doing so. The attorney general will be empowered to enforce compliance, taking legal action against non-compliant developers or those who fail to report incidents promptly.

Additionally, the bill strengthens protections for whistleblowers, which is increasingly necessary in today's climate.

If enacted, SB-1047 will dramatically reshape Silicon Valley, a hub for AI innovation. The rapid advancements and entrepreneurial spirit witnessed in recent years could be overshadowed by this legislation. Some companies may even consider relocating, although Wiener points out that this would not exempt them, since the bill's reach extends to all businesses operating in California.

Interestingly, the thresholds delineating whether an AI model is "covered" by the bill are relatively high, which may shield smaller developers and startups from its more burdensome requirements. Critics suggest that the law primarily targets the largest corporations, such as Google and Meta.

However, considering that models like GPT-4 already exceed the stipulated thresholds, some industry experts argue that the bill could hinder innovation overall.

Among the opponents of SB-1047 are key players like Google, Meta, and OpenAI. Initially, Anthropic expressed opposition but later adjusted its stance after proposing amendments, acknowledging that the updated bill's advantages might outweigh its drawbacks.

The major tech companies, which stand to gain the most from the current AI landscape, have expressed concerns over any law that could slow down their progress. While advocating for national regulations, they seem to oppose state-level legislation, which raises questions about their true intentions.

Bloomberg reported OpenAI's response to Wiener, which emphasized that comprehensive AI regulations should originate at the federal level rather than be fragmented across states. The company argues that a cohesive set of policies would promote innovation and allow the U.S. to lead in establishing global standards. With this response, OpenAI joined other AI companies in opposing SB-1047.

Wiener’s retort was pointed: if Congress fails to act, what is the alternative? He argued that SB-1047 is a reasonable measure requiring large AI labs to fulfill their existing commitments to ensure the safety of their expansive models.

But the discussions surrounding this bill extend beyond the industry’s interests.

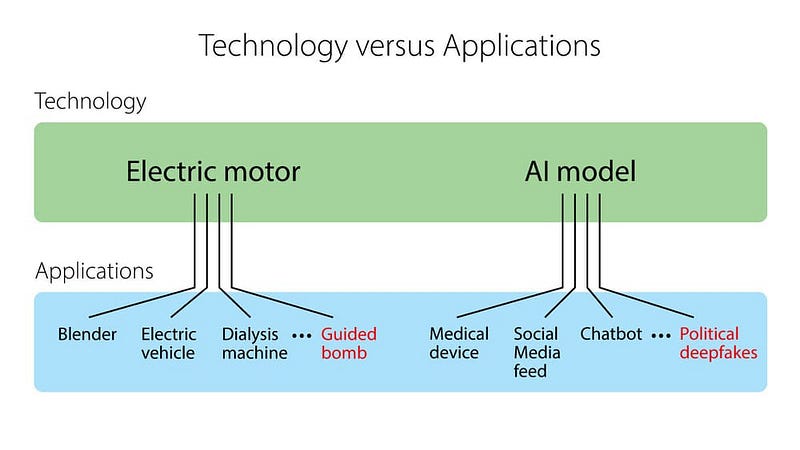

My approach is to consider the industry’s claims, but I reserve judgment until credible voices from academia express similar concerns. This has indeed occurred: several academics have labeled the bill “well-intentioned but misinformed,” noting that AI is a dual-use technology, capable of both beneficial and harmful applications.

Prominent figures like Fei-Fei Li, a Stanford professor, warn that if enacted, SB-1047 could jeopardize the nascent AI ecosystem, particularly disadvantaging public sector initiatives, academia, and smaller tech firms. Li argues that the bill may stifle innovation, penalizing developers and hampering academic research without effectively addressing its intended objectives.

Andrew Ng, another esteemed Stanford professor, criticized the "hazardous capability" designation as unreasonable, since it could hold AI builders liable for misuse of their models, a burden he believes is unrealistic for developers to navigate.

Chapter 2: The Industry Response

As the debate continues, various stakeholders express differing opinions on the necessary balance between regulation and innovation.

The first video highlights reactions from small businesses regarding California's SB 1047, providing insights into the local economic implications of the bill.

The second video discusses California's approach to AI through SB 1047, shedding light on the regulatory landscape and its potential impact on the tech industry.

In summary, the conversation surrounding SB-1047 is complex, filled with nuanced perspectives that resist straightforward conclusions. It ultimately raises two pivotal questions: Should the state influence the trajectory of technological innovation? And should our focus be on innovation or safety?